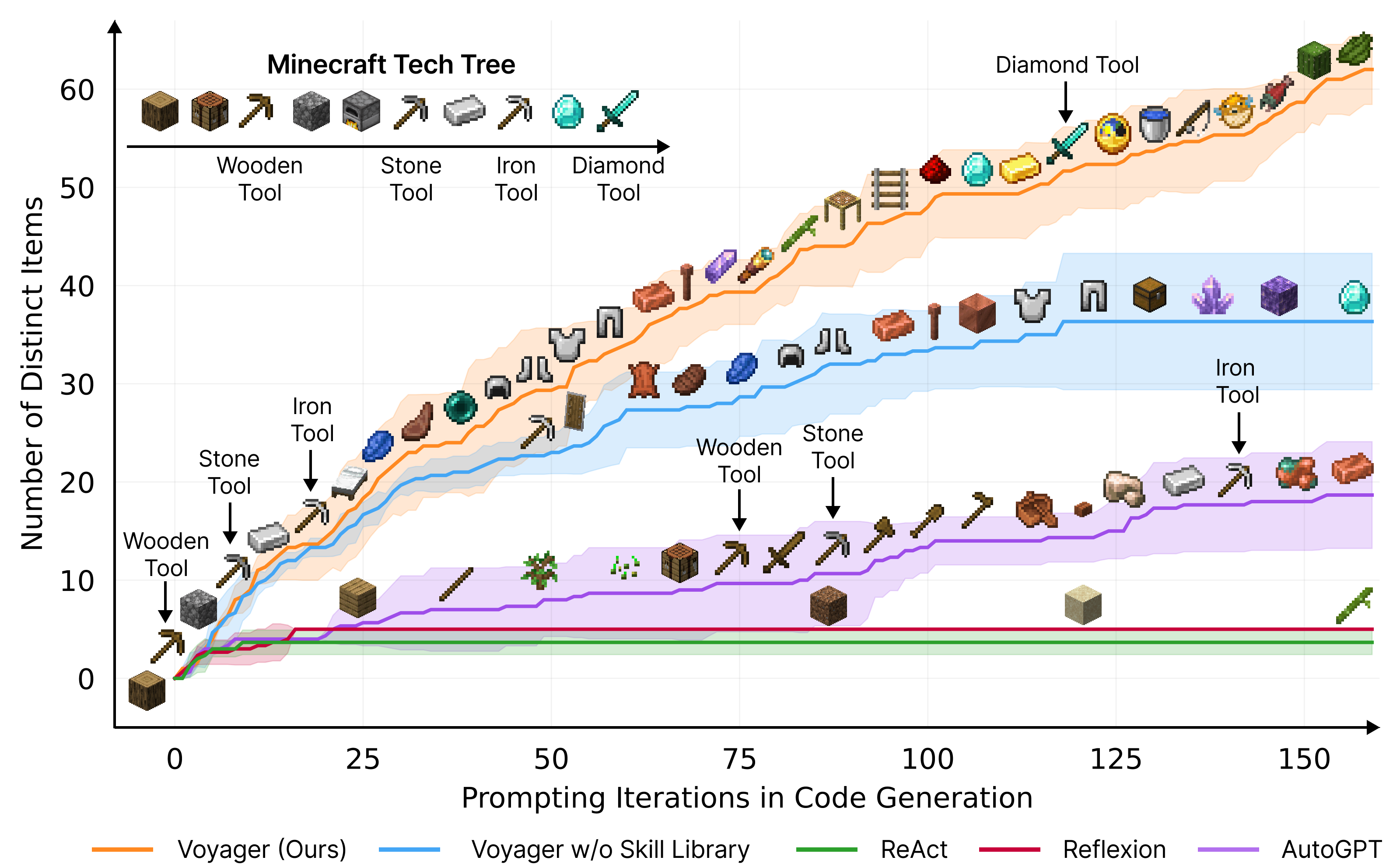

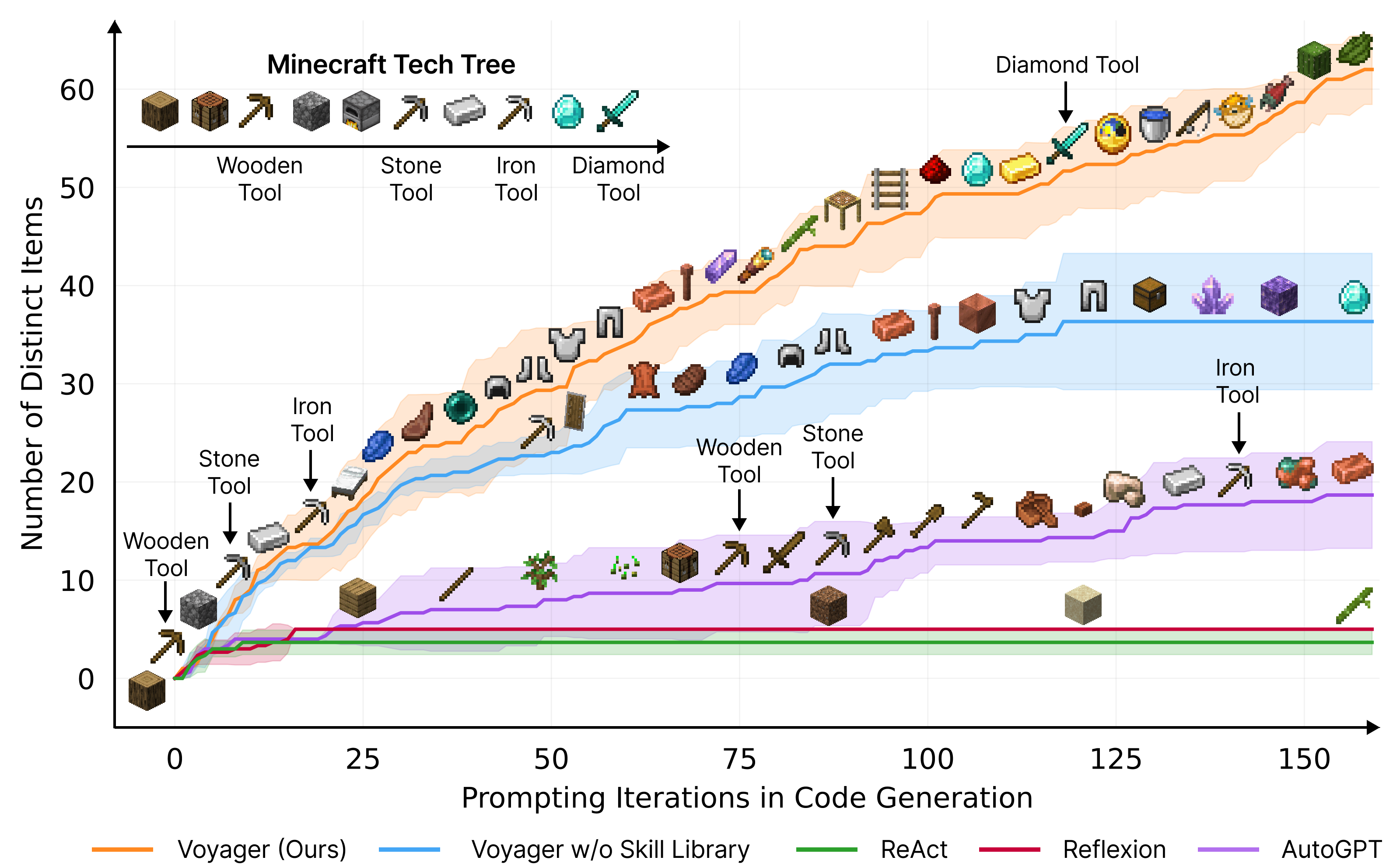

Voyager discovers new Minecraft items and skills continually by self-driven exploration, significantly outperforming the baselines.

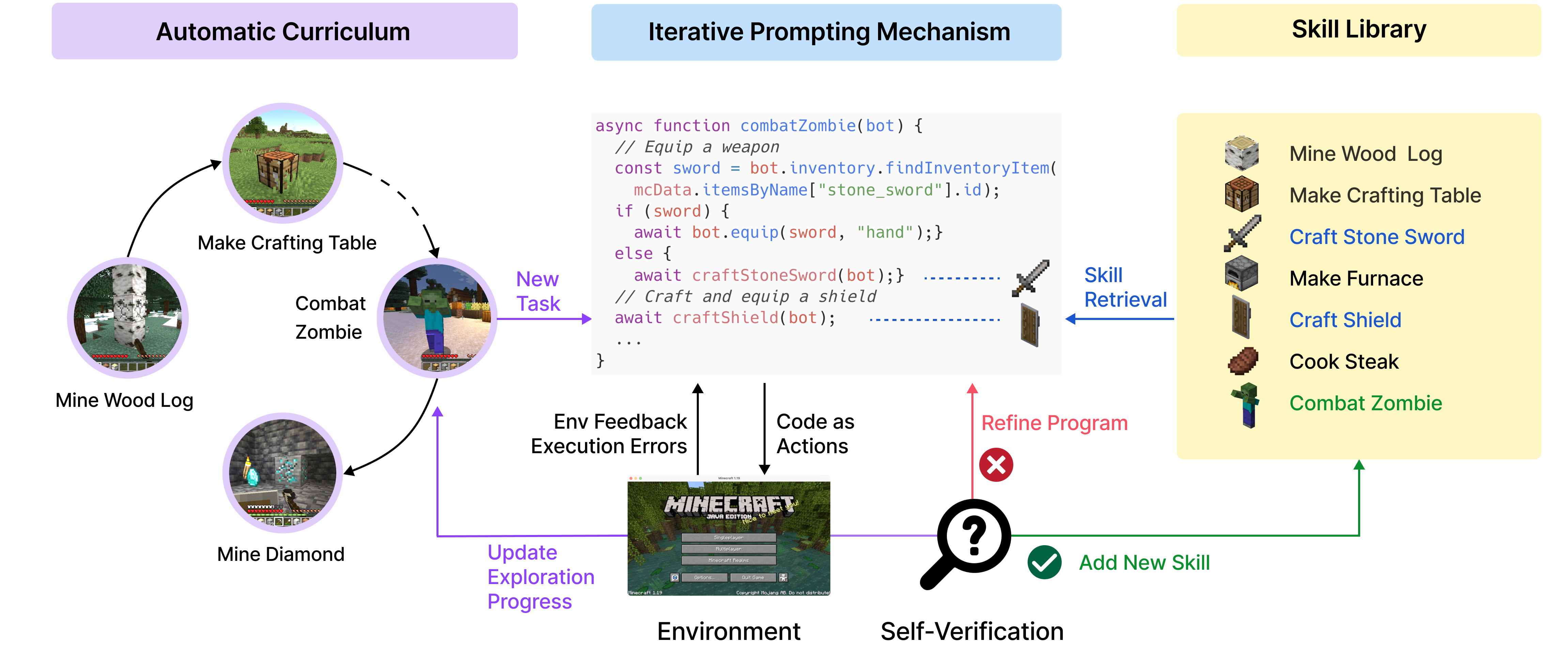

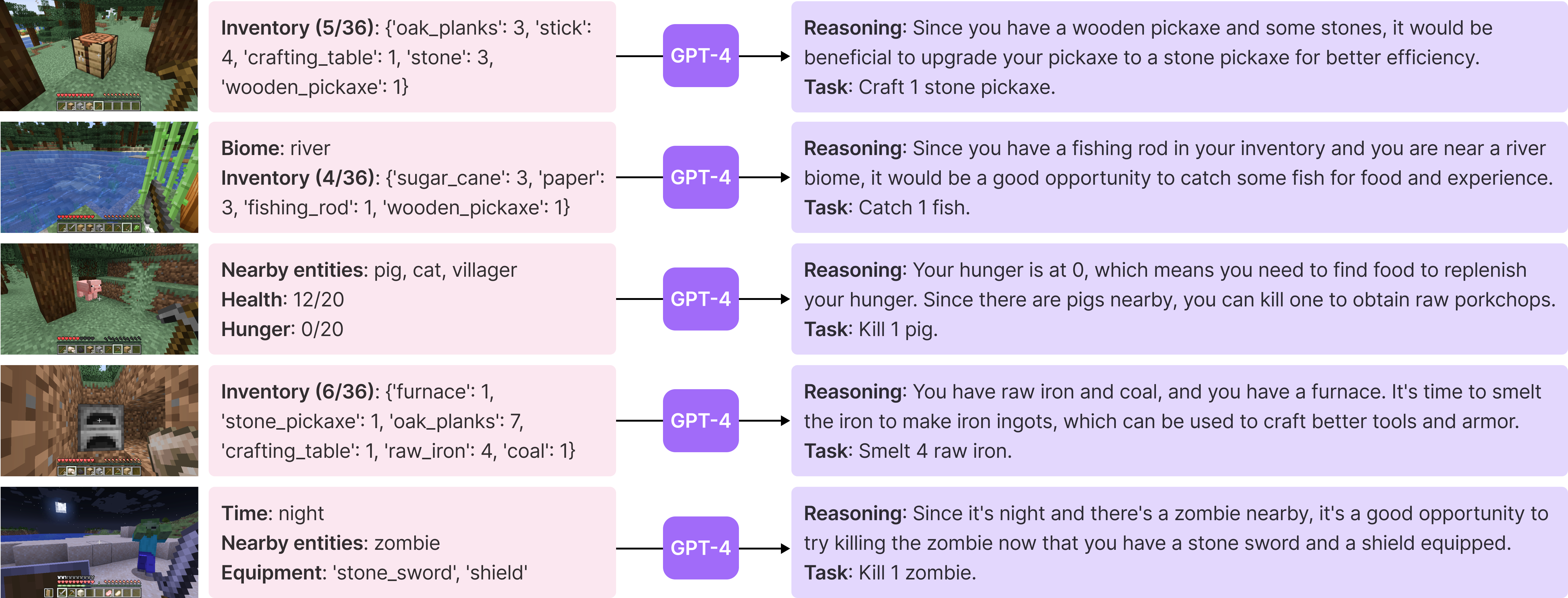

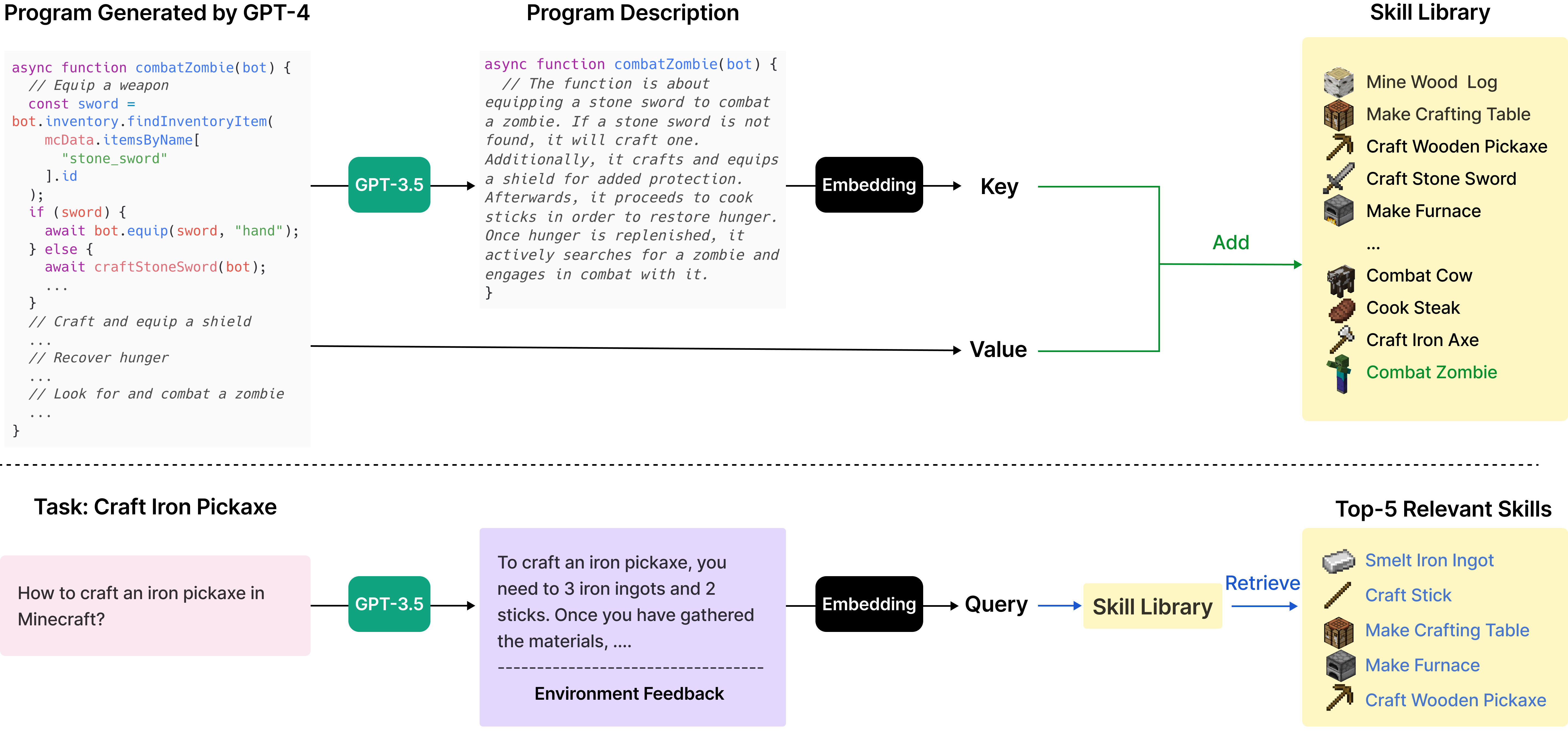

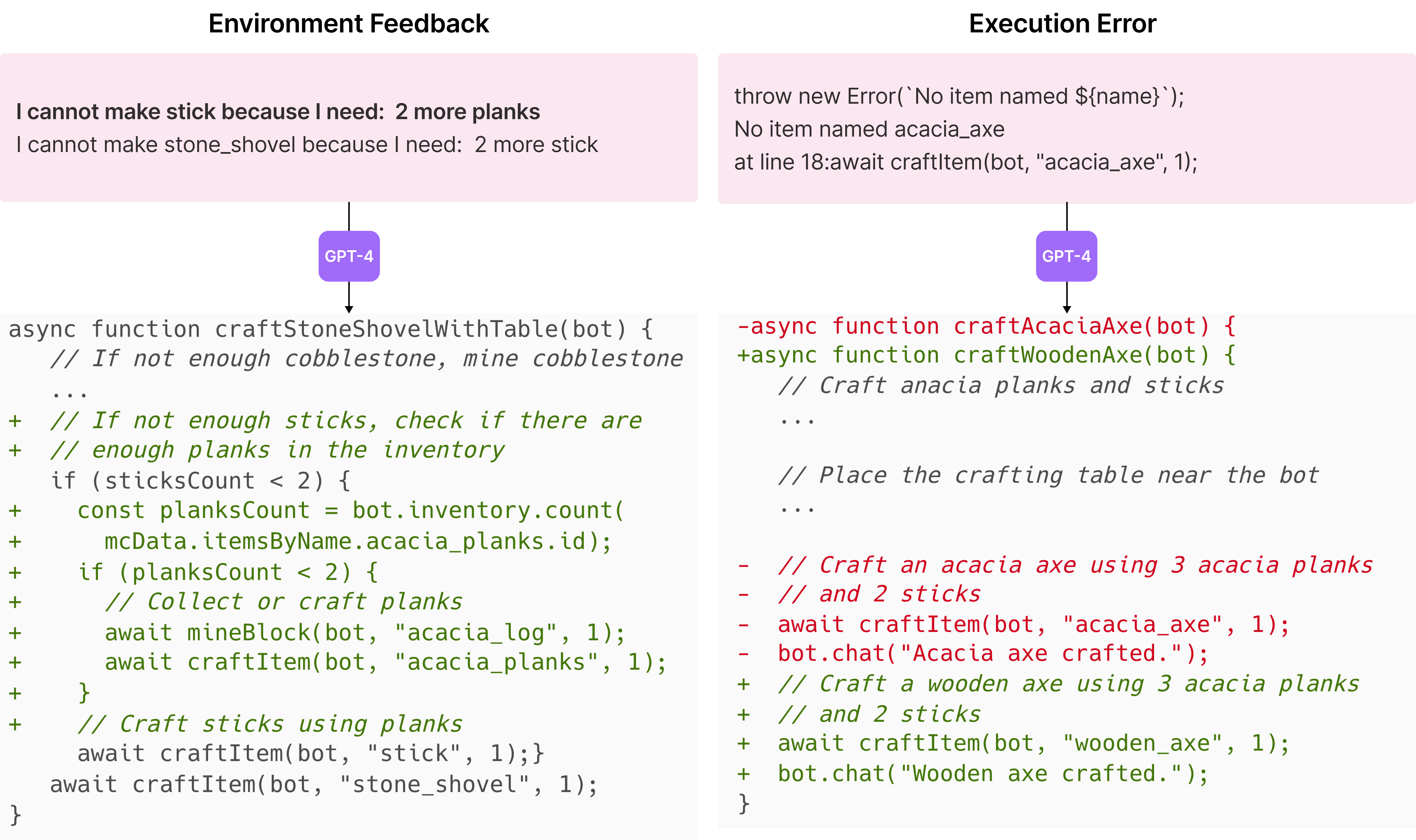

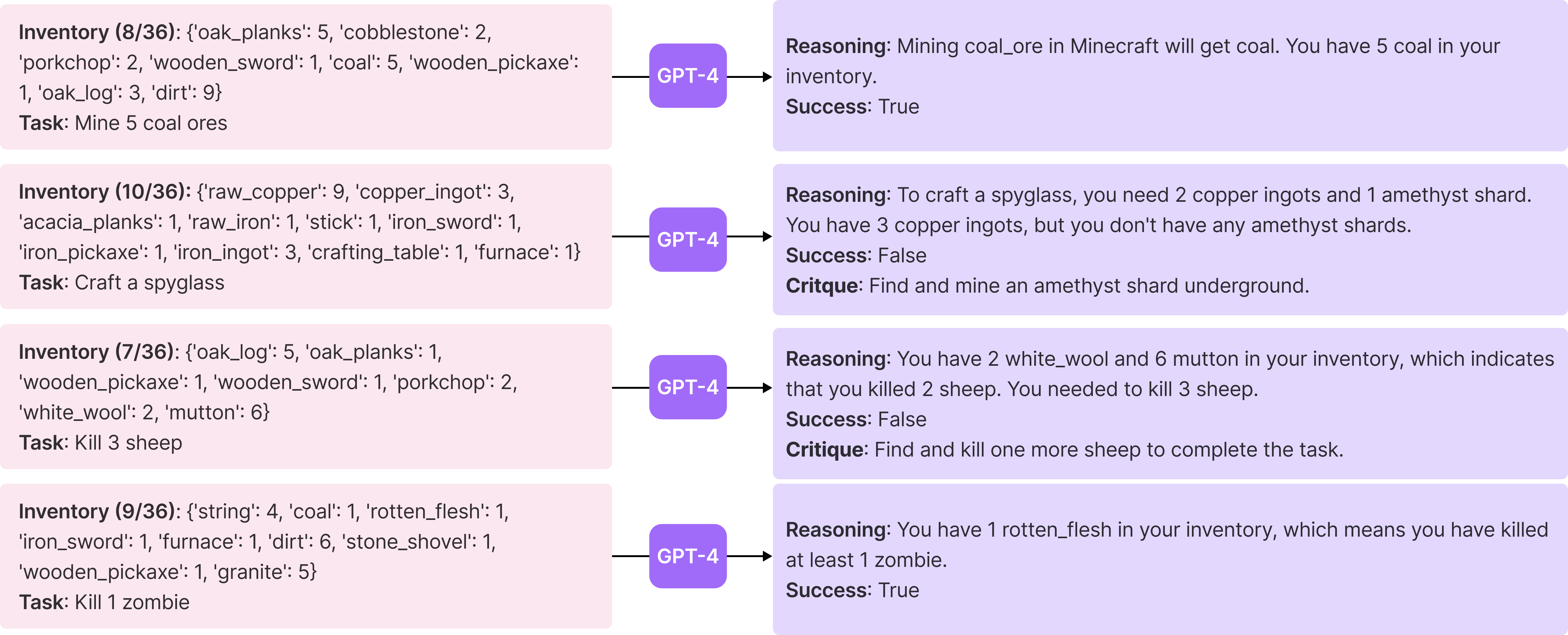

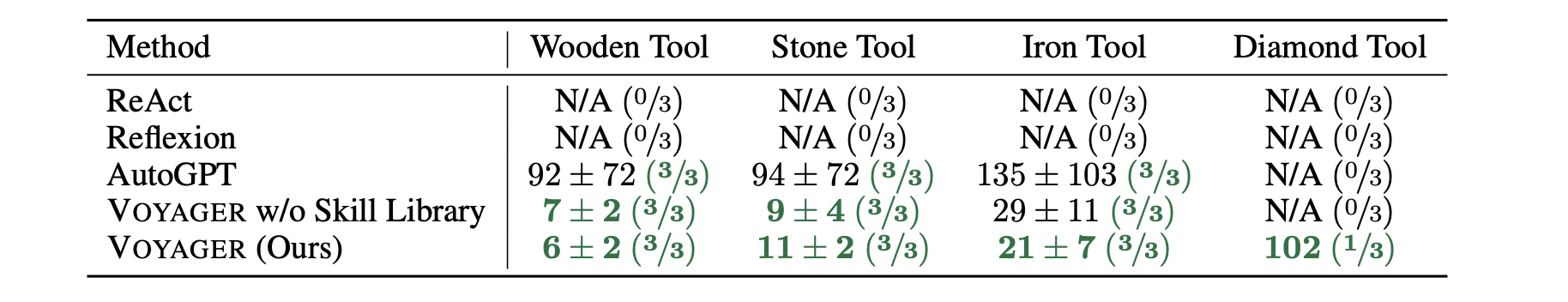

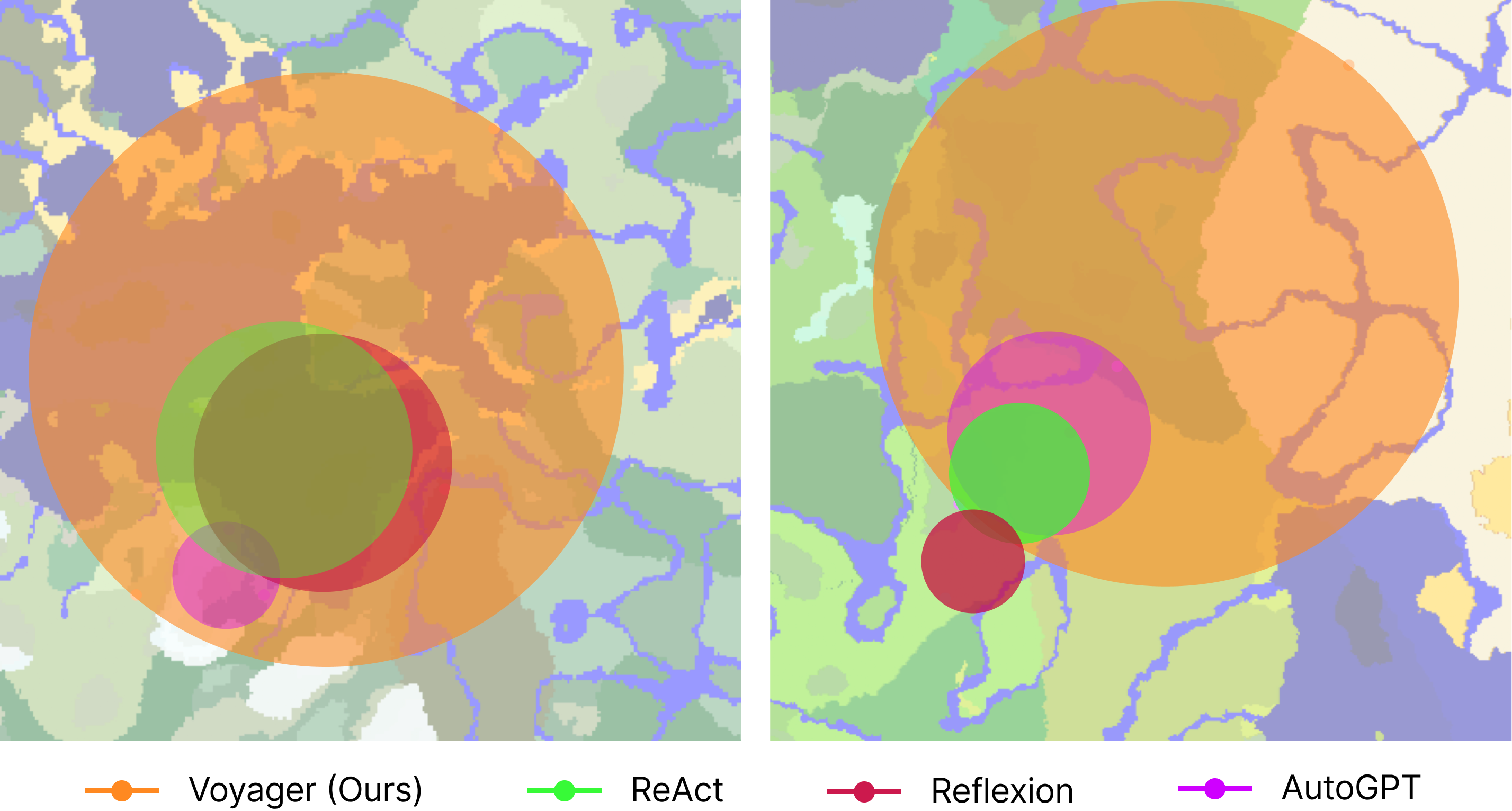

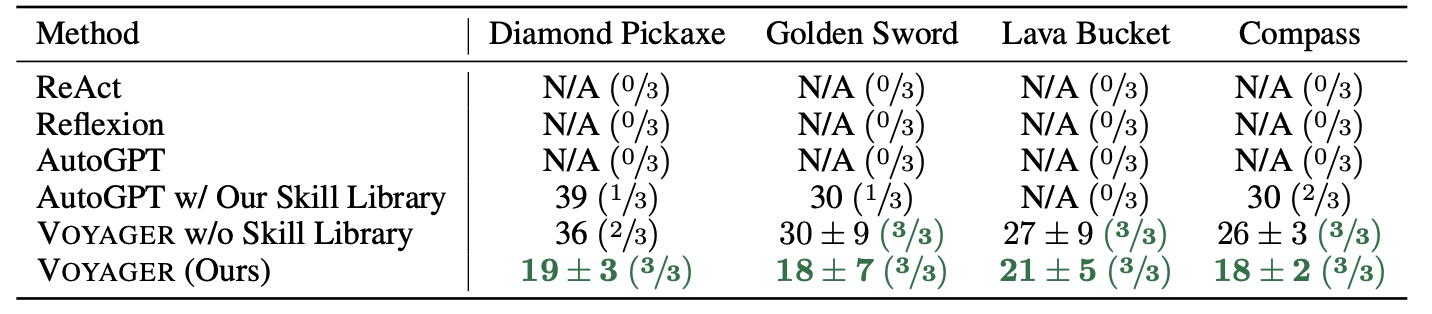

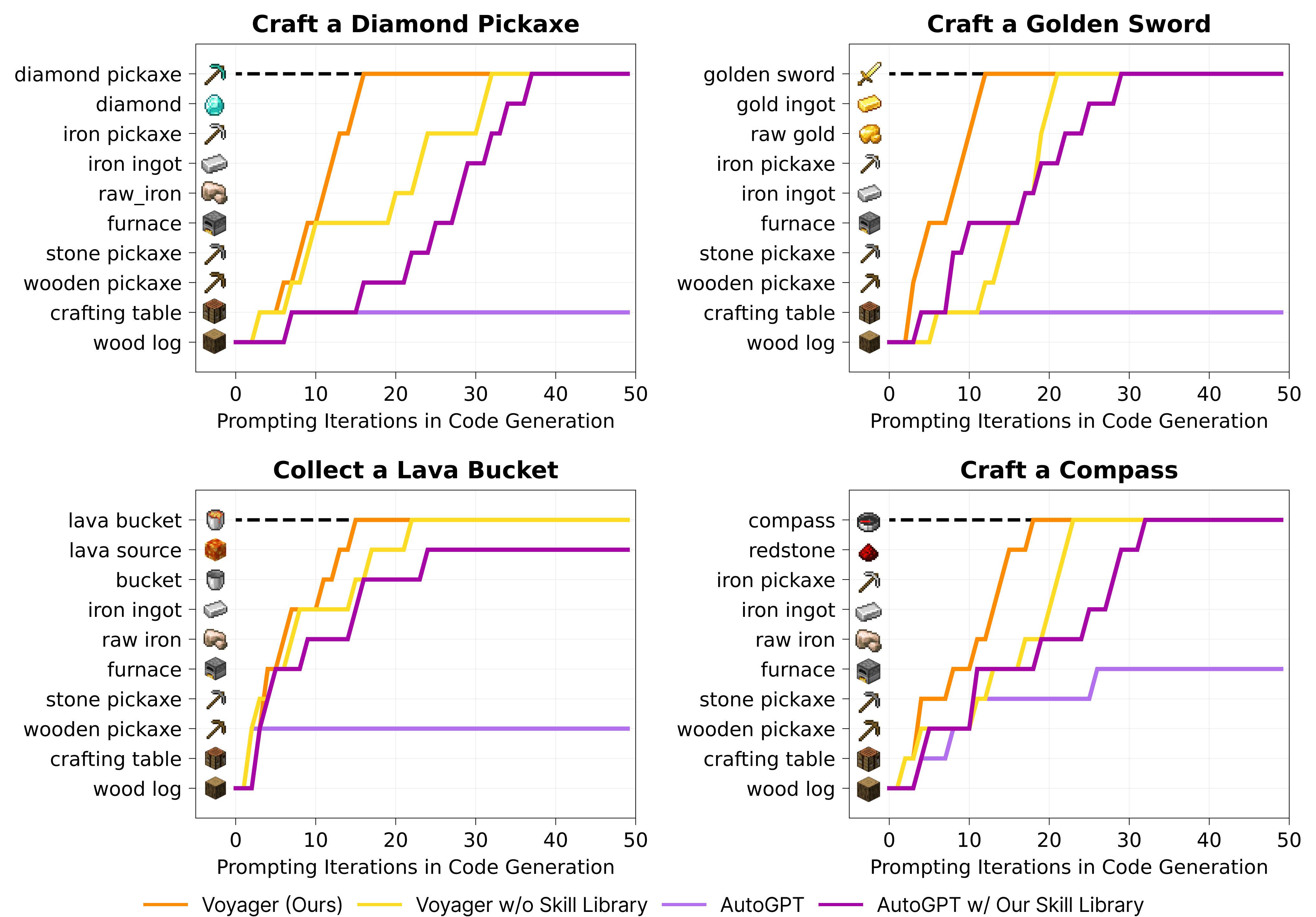

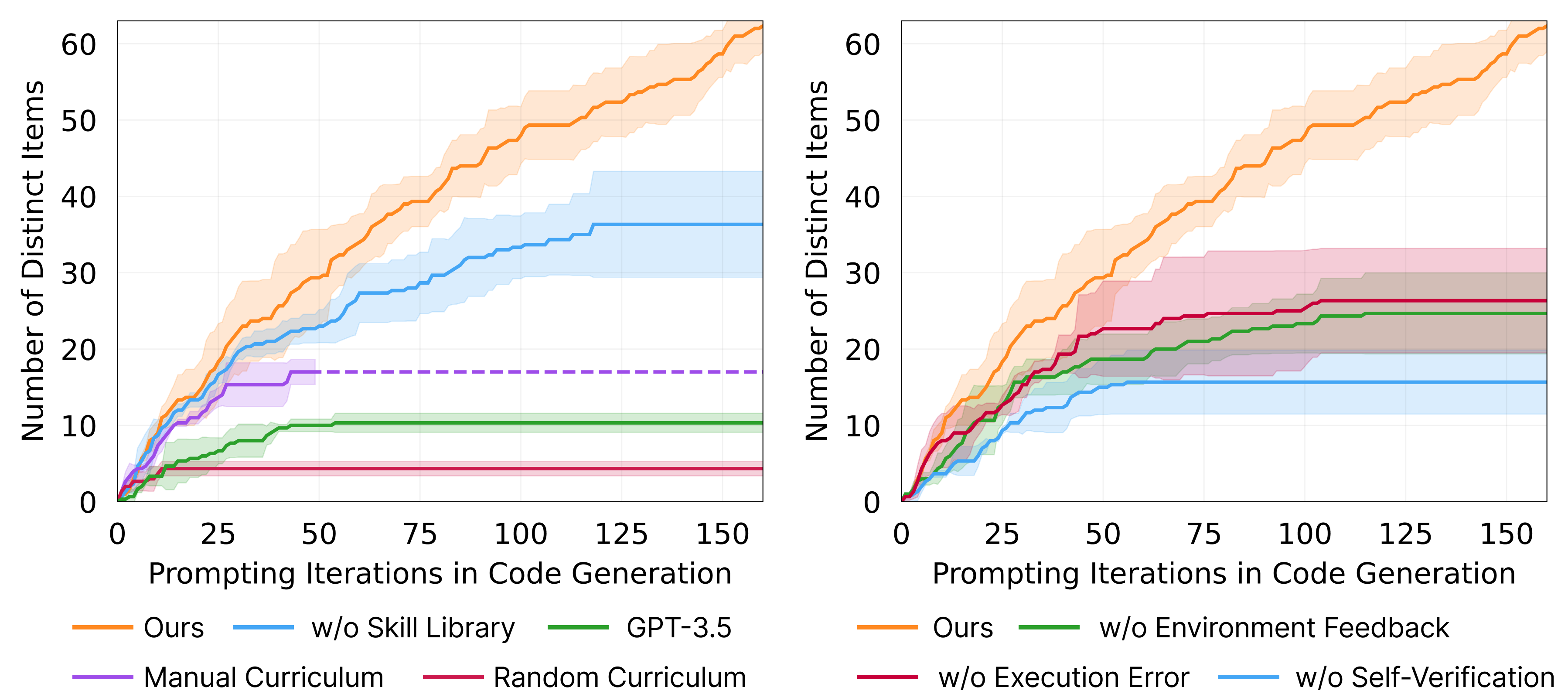

We introduce Voyager, the first LLM-powered embodied lifelong learning agent in Minecraft that continuously explores the world, acquires diverse skills, and makes novel discoveries without human intervention. Voyager consists of three key components: 1) an automatic curriculum that maximizes exploration, 2) an ever-growing skill library of executable code for storing and retrieving complex behaviors, and 3) a new iterative prompting mechanism that incorporates environment feedback, execution errors, and self-verification for program improvement. Voyager interacts with GPT-4 via blackbox queries, which bypasses the need for model parameter fine-tuning. The skills developed by Voyager are temporally extended, interpretable, and compositional, which compounds the agent's abilities rapidly and alleviates catastrophic forgetting. Empirically, Voyager shows strong in-context lifelong learning capability and exhibits exceptional proficiency in playing Minecraft. It obtains 3.3x more unique items, travels 2.3x longer distances, and unlocks key tech tree milestones up to 15.3x faster than prior SOTA. Voyager is able to utilize the learned skill library in a new Minecraft world to solve novel tasks from scratch, while other techniques struggle to generalize.
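The way the three components fit together can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Voyager's actual code. Every name here (`SkillLibrary`, `propose_next_task`, `learn_task`, `run_agent`) is hypothetical. The curriculum is a fixed candidate list rather than an LLM-proposed one, skill retrieval uses simple word overlap, and the black-box GPT-4 query, the Minecraft environment, and the self-verification step are passed in as plain callables.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class SkillLibrary:
    """Stores verified programs keyed by a task description (hypothetical design)."""
    skills: dict[str, str] = field(default_factory=dict)

    def add(self, description: str, program: str) -> None:
        self.skills[description] = program

    def retrieve(self, task: str, k: int = 3) -> list[str]:
        # Toy relevance score: number of words shared with the task description.
        task_words = set(task.lower().split())
        ranked = sorted(
            self.skills.items(),
            key=lambda item: len(task_words & set(item[0].lower().split())),
            reverse=True,
        )
        return [program for _, program in ranked[:k]]


def propose_next_task(candidates: list[str], attempted: set[str]) -> Optional[str]:
    """Stand-in for the automatic curriculum: first candidate not yet attempted."""
    for task in candidates:
        if task not in attempted:
            return task
    return None


def learn_task(
    task: str,
    library: SkillLibrary,
    generate: Callable[[str, list[str], str], str],  # stand-in for the LLM query
    execute: Callable[[str], str],                   # returns feedback or an error string
    verify: Callable[[str, str], bool],              # stand-in for self-verification
    max_rounds: int = 4,
) -> bool:
    """Iterative prompting: regenerate the program until verification passes."""
    feedback = ""
    for _ in range(max_rounds):
        program = generate(task, library.retrieve(task), feedback)
        feedback = execute(program)
        if verify(task, feedback):
            library.add(task, program)  # only verified programs become skills
            return True
    return False


def run_agent(candidates, generate, execute, verify) -> SkillLibrary:
    """Outer loop: the curriculum proposes tasks, each one is learned in turn."""
    library = SkillLibrary()
    attempted: set[str] = set()
    while (task := propose_next_task(candidates, attempted)) is not None:
        attempted.add(task)
        learn_task(task, library, generate, execute, verify)
    return library


# Fake components for a dry run: the first program is buggy, the retry is fixed.
def fake_generate(task: str, skills: list[str], feedback: str) -> str:
    return "craft(planks)" if "error" in feedback else "craft(plank)"


def fake_execute(program: str) -> str:
    if program == "craft(planks)":
        return "ok: crafted planks"
    return "error: unknown item 'plank'"


def fake_verify(task: str, feedback: str) -> bool:
    return feedback.startswith("ok")


if __name__ == "__main__":
    library = run_agent(["craft planks"], fake_generate, fake_execute, fake_verify)
    print(library.skills)
```

In the dry run, the first generated program fails, its error message is fed back into the next generation call, and only the corrected program is stored. Because the language model is reached only through the `generate` callable, no model parameters are touched, which mirrors the black-box usage described above.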

Voyager consists of three key components: an automatic curriculum for open-ended exploration, a skill library for increasingly complex behaviors, and an iterative prompting mechanism that uses code as action space.

We systematically evaluate Voyager and baselines on their exploration performance, tech tree mastery, map coverage, and zero-shot generalization capability to novel tasks in a new world.

In this work, we introduce Voyager, the first LLM-powered embodied lifelong learning agent, which leverages GPT-4 to explore the world continuously, develop increasingly sophisticated skills, and make new discoveries consistently without human intervention. Voyager exhibits superior performance in discovering novel items, unlocking the Minecraft tech tree, traversing diverse terrains, and applying its learned skill library to unseen tasks in a newly instantiated world. Voyager serves as a starting point to develop powerful generalist agents without tuning the model parameters.

"They Plugged GPT-4 Into Minecraft—and Unearthed New Potential for AI. The bot plays the video game by tapping the text generator to pick up new skills, suggesting that the tech behind ChatGPT could automate many workplace tasks." - Will Knight, WIRED

"The Voyager project shows, however, that by pairing GPT-4’s abilities with agent software that stores sequences that work and remembers what does not, developers can achieve stunning results." - John Koetsier, Forbes

"Voyager, the GPT-4 bot that plays Minecraft autonomously and better than anyone else" - Ruetir

"This AI used GPT-4 to become an expert Minecraft player" - Devin Coldewey, TechCrunch

Coverage Index:

[Atmarkit]

[Career Engine]

[Crast.net]

[Daily Top Feeds]

[Entrepreneur en Espanol]

[Finance Jxyuging]

[Forbes]

[Forbes Argentina]

[Gaming Deputy]

[Gearrice]

[Haberik]

[Head Topics]

[InfoQ]

[ITmedia News]

[Mark Tech Post]

[Medium]

[MSN]

[Note]

[Noticias de Hoy]

[Ruetir]

[Stock HK]

[Tech Tribune France]

[TechCrunch]

[TechBeezer]

[Toutiao]

[US Times Post]

[VN Explorer]

[WIRED]

[Zaker]

@article{wang2023voyager,
  title   = {Voyager: An Open-Ended Embodied Agent with Large Language Models},
  author  = {Guanzhi Wang and Yuqi Xie and Yunfan Jiang and Ajay Mandlekar and Chaowei Xiao and Yuke Zhu and Linxi Fan and Anima Anandkumar},
  year    = {2023},
  journal = {arXiv preprint arXiv: Arxiv-2305.16291}
}